system.file(package = "dslabs")

#> [1] "/Library/Frameworks/R.framework/Versions/4.5-arm64/Resources/library/dslabs"6 Importing data

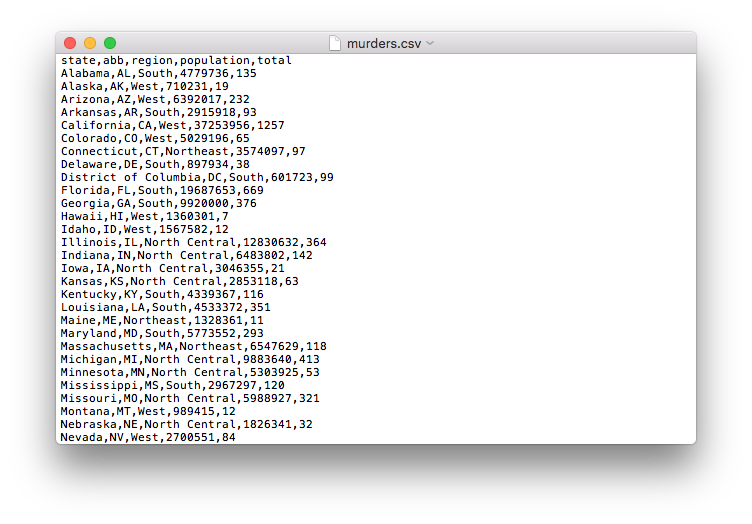

We have been using datasets already stored as R objects. In data analysis work we rarely have such luck and will have to import data into R from either a file, a database, or another source. Currently, one of the most common ways of storing and sharing data for analysis is through electronic spreadsheets. A spreadsheet stores data in rows and columns. It is basically a file version of a data frame. When saving such a table to a computer file, one needs a way to define when a new row or column ends and the other begins. This in turn defines the cells in which single values are stored. Here is an example of what a comma separated file looks like if we open it with a basic text editor:

In this chapter, we outline how to load data from a file into R. First, it’s crucial to identify the file’s location; thus, we touch on file paths and working directories (detailed in Chapter 18). Next, we delve into file types (text or binary) and encodings (like ASCII and Unicode), both essential for data import. We then introduce popular functions for data importing, referred to as parsers. Lastly, we offer tips on how to store data in spreadsheets. Advanced topics like extracting data from websites or PDFs will be discussed in the book’s Data Wrangling section.

6.2 File types

For most data analysis applications, files can generally be classified into two categories: text files and binary files. In this section we describe the most widely used format for both these types and the best way to identify them. In the last subsection we describe the importance of knowing the file encoding.

For this and the following section we assume you have copied the murders.csv file into your working directory. You can use the code at the end of the previous section to do this.

6.2.1 Text files

You have already worked with text files. All your R scripts and Quarto files, for example, are text files and so are the Quarto files used to create this book. The murders.csv file mentioned above is also text files. One big advantage of these files is that we can easily “look” at them without having to purchase any kind of special software or follow complicated instructions. Any text editor can be used to examine a text file, including freely available editors such as RStudio or nano. To see this, try opening a csv file using the “Open file” RStudio tool. You should be able to see the content right on your editor.

When text files are used to store a spreadsheet, line breaks are used to separate rows and a predefined character, referred to as the delimiter, is used to separate columns within a row. The most common delimiters are comma (,), semicolon (;), space (), and tab (a preset number of spaces or \t). Slightly different approaches are used to read these files into R, so we need to know what delimiter was used. In some cases, the delimiter can be inferred from file suffix. For example, files ending in csv or tsv are expected to be comma and tab delimited, respectively. However, it is harder to infer the delimiter for files ending in txt. As a result we recommend looking at the file rather than inferring from the suffix. You can look at any number of lines from within R using the readLines function:

readLines("murders.csv", n = 3)

#> [1] "state,abb,region,population,total"

#> [2] "Alabama,AL,South,4779736,135"

#> [3] "Alaska,AK,West,710231,19"This immediately reveals that the file is indeed comma delimited. It also reveals that the file has a header: the first row contains column names rather than data. This is also important to know. Most parsers assume the file starts with a header, but not all files have one.

6.2.2 Binary files

Opening image files such as jpg or png in a text editor or using readLines in R will not show comprehensible content because these are binary files. Unlike text files, which are designed for human readability and have standardized conventions, binary files can adopt numerous formats specific to their data type. While R’s readBin function can process any binary file, interpreting the output necessitates a thorough understanding of the file’s structure. This intricate topic isn’t covered in this book. Instead, we concentrate on the prevalent binary formats for spreadsheets: Microsoft Excel’s xls and xlsx.

6.2.3 Encoding

A frequent issue when importing data, whether text or binary, is incorrectly identifying the file’s encoding. At its core, a computer translates everything into sequences of 0s and 1s. ASCII is an encoding system that assigns specific numbers to characters. Using 7 bits, ASCII can represent \(2^7 = 128\) unique symbols, sufficient for all English keyboard characters. However, many global languages contain characters outside ASCII’s range. For instance, the é in “México” isn’t in ASCII’s catalog. To address this, broader encodings, such as Unicode, emerged. Unicode offers variations using 8, 16, or 32 bits, known as UTF-8, UTF-16, and UTF-32. RStudio typically uses UTF-8 as its default. Notably, ASCII is a subset of UTF-8, meaning that if a file is ASCII-encoded, presuming it’s UTF-8 encoded won’t cause issues. However, there other encodings, such as ISO-8859-1 (also known as Latin-1) developed for the western European languages, Big5 for Traditional Chinese, and ISO-8859-6 for Arabic.

The dslabs package includes a file that is not UTF-8 encoded to serve as an example. Notice the strange characters that appear you attempt to read in the first line:

fn <- "calificaciones.csv"

file.copy(file.path(system.file("extdata", package = "dslabs"), fn), fn)

#> [1] TRUE

readLines(fn, n = 1)

#> [1] "\"nombre\",\"f.n.\",\"estampa\",\"puntuaci\xf3n\""In the following section, we’ll introduce several helpful import functions, some of which allow you to specify the file encoding.

6.3 Parsers

Importing functions, or parsers, are available from base R. However, more powerful and often faster functions are available in the readr, readxl, and data.table packages. In this section we review some examples. We also describe how data can be downloaded or read directly from the internet.

6.3.1 Base R

Base R provides several file parsers for example read.csv, read.table and read.delim. The first argument can take either a full or relative path. If a relative path is provided, the parser assumes you want to search in the working directory. Therefore, to read the murders.csv file previously copied to our working directory, we can simply type:

dat <- read.csv("murders.csv")An often useful R-base importing function is scan, as it provides much flexibility. When reading in spreadsheets many things can go wrong. The file might have multiline headers or be missing cells. With experience you will learn how to deal with different challenges. Carefully reading the help files for the functions discussed here will be useful. With scan you can read-in each cell of a file. Here is an example:

x <- scan("murders.csv", sep = ",", what = "c")

x[1:10]

#> [1] "state" "abb" "region" "population" "total"

#> [6] "Alabama" "AL" "South" "4779736" "135"6.3.2 readr

The readr package includes parsers, for reading text file spreadsheets into R. readr is part of the tidyverse, but you can load it directly using:

The following functions are available to read-in text file spreadsheets:

| Function | Format | Typical suffix |

|---|---|---|

| read_table | white space separated values | txt |

| read_csv | comma separated values | csv |

| read_csv2 | semicolon separated values | csv |

| read_tsv | tab delimited separated values | tsv |

| read_delim | general text file format, must define delimiter | txt |

It also includes read_lines with similar functionality to readLines.

We can read in the murders.csv file using

dat <- read_csv("murders.csv")

#> Rows: 51 Columns: 5

#> ── Column specification ────────────────────────────────────────────────

#> Delimiter: ","

#> chr (3): state, abb, region

#> dbl (2): population, total

#>

#> ℹ Use `spec()` to retrieve the full column specification for this data.

#> ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.Note that we receive a message letting us know what data types were used for each column. Also note that dat is a tibble, not just a data frame. We can suppress this message using the argument show_col_types = FALSE.

The readr parsers permit us to specify an encoding. It also includes a function that tries to guess the encoding:

guess_encoding("murders.csv")

#> # A tibble: 1 × 2

#> encoding confidence

#> <chr> <dbl>

#> 1 ASCII 1This function can help us read the file we previously noted was showing strange characters:

guess_encoding("calificaciones.csv")

#> # A tibble: 3 × 2

#> encoding confidence

#> <chr> <dbl>

#> 1 ISO-8859-1 0.92

#> 2 ISO-8859-2 0.72

#> 3 ISO-8859-9 0.53Once we know the encoding we can specify it through the locale argument:

We learn about locales in Chapter 14.

We can now see that the characters in the header were read in correctly:

names(dat)

#> [1] "nombre" "f.n." "estampa" "puntuación"6.3.3 readxl

The readxl package provides functions to read-in Microsoft Excel formats.

The main functions are:

| Function | Format | Typical suffix |

|---|---|---|

| read_excel | auto detect the format | xls, xlsx |

| read_xls | original format | xls |

| read_xlsx | new format | xlsx |

The Microsoft Excel formats permit you to have more than one spreadsheet in one file. These are referred to as sheets. The functions listed above read the first sheet by default, but we can also read the others. The excel_sheets function gives us the names of all the sheets in an Excel file. These names can then be passed to the sheet argument in the three functions above to read sheets other than the first.

6.3.4 data.table

The data.table package provides the fread function, a powerful and fast utility designed for reading large datasets. fread automatically detects the format of the input, whether it’s delimited text or even files compressed in formats like gzip or zip. It offers a significant speed advantage over the other parsers described here, especially for large files.

library(data.table)

dat <- fread("murders.csv")Note fread returns a data.table object.

6.3.5 Downloading files

A common place for data to reside is on the internet. When these data are in files, we can download them and then import them or even read them directly from the web. For example, we note that because our dslabs package is on GitHub, the file we downloaded with the package has a url:

url <- paste0("https://raw.githubusercontent.com/",

"rafalab/dslabs/master/inst/extdata/murders.csv")Most parsers can read these files directly:

dat <- read.csv(url)If you want to have a local copy of the file, you can use the download.file function:

download.file(url, "murders.csv")This will download the file and save it on your system with the name murders.csv. You can use any name here, not necessarily murders.csv.

The function download.file overwrites existing files without warning.

Two functions that are sometimes useful when downloading data from the internet are tempdir and tempfile. The first creates a directory with a random name that is very likely to be unique. Similarly, tempfile creates a character string, not a file, that is likely to be a unique filename. So you can run a command like this which erases the temporary file once it imports the data:

tmp_filename <- tempfile()

download.file(url, tmp_filename)

dat <- read_csv(tmp_filename)

file.remove(tmp_filename)6.4 Organizing data with spreadsheets

Although this book focuses almost exclusively on data analysis, data management is also an important part of data science operations. As explained in the introduction, we do not cover this topic. However, quite often data analysts need to collect data, or work with others collecting data, in a way that is most conveniently stored in a spreadsheet. Although filling out a spreadsheet by hand is a practice we highly discourage, and we instead recommend the process be automatized as much as possible, sometimes you just have to do it. Therefore, in this section, we provide recommendations on how to organize data in a spreadsheet. Although there are R packages designed to read Microsoft Excel spreadsheets, we generally want to avoid this format. Instead, we recommend Google Sheets as a free software tool. Below we summarize the recommendations made in paper by Karl Broman and Kara Woo1. Please read the paper for important details.

- Be Consistent - Before you commence entering data, have a plan. Once you have a plan, be consistent and stick to it.

-

Choose Good Names for Things - You want the names you pick for objects, files, and directories to be memorable, easy to spell, and descriptive. This is actually a hard balance to achieve and it does require time and thought. One important rule to follow is do not use spaces, use underscores

_or dashes instead-. Also, avoid symbols; stick to letters and numbers. - Write Dates as YYYY-MM-DD - To avoid confusion, we strongly recommend using this global ISO 8601 standard.

- No Empty Cells - Fill in all cells and use some common code for missing data.

- Put Just One Thing in a Cell - It is better to add columns to store the extra information rather than having more than one piece of information in one cell.

- Make It a Rectangle - The spreadsheet should be a rectangle.

- Create a Data Dictionary - If you need to explain things, such as what the columns are or what the labels used for categorical variables are, do this in a separate file.

- No Calculations in the Raw Data Files - Excel permits you to perform calculations. Do not make this part of your spreadsheet. Code for calculations should be in a script.

- Do Not Use Font Color or Highlighting as Data - Most import functions are not able to import this information. Encode this information as a variable instead.

- Make Backups - Make regular backups of your data.

- Use Data Validation to Avoid Errors - Leverage the tools in your spreadsheet software so that the process is as error-free and repetitive-stress-injury-free as possible.

- Save the Data as Text Files - Save files for sharing in comma or tab delimited format.

6.5 Exercises

1. Use the read_csv function to read each of the files that the following code saves in the files object:

path <- system.file("extdata", package = "dslabs")

files <- list.files(path)

files2. Note that the the olive file, gives us a warning. This is because the first line of the file is missing the header for the first column.

Read the help file for read_csv to figure out how to read in the file without reading this header. If you skip the header, you should not get this warning. Save the result to an object called dat.

3. A problem with the previous approach is that we don’t know what the columns represent. Type:

names(dat)to see that the names are not informative.

Use the readLines function to read in just the first line.

4. Pick a measurement you can take on a regular basis. For example, your daily weight or how long it takes you to run 5 miles. Keep a spreadsheet that includes the date, the hour, the measurement, and any other informative variable you think is worth keeping. Do this for 2 weeks. Then make a plot.